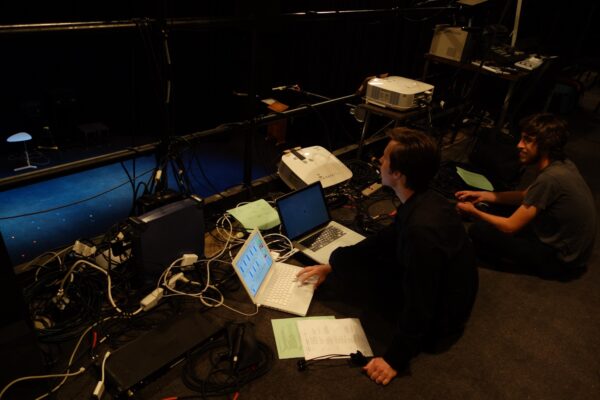

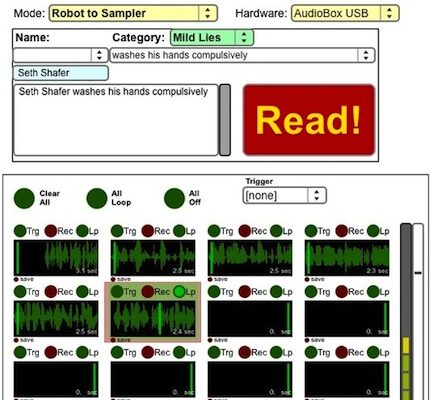

General Description

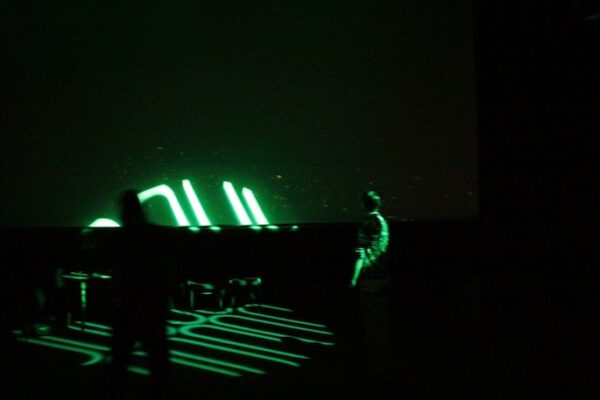

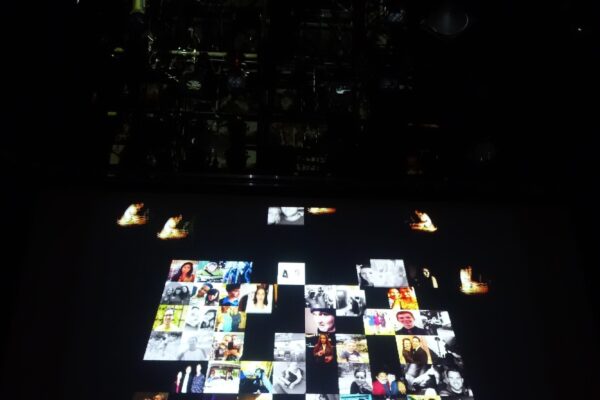

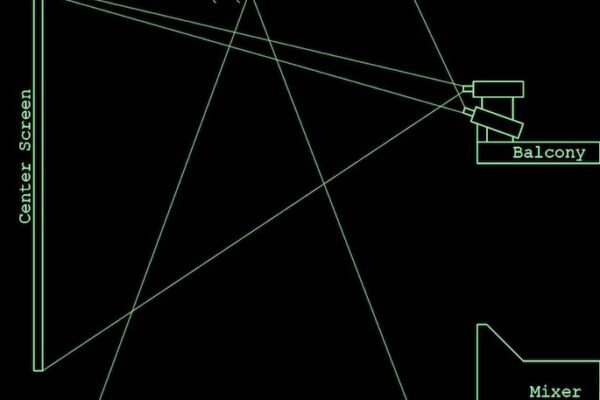

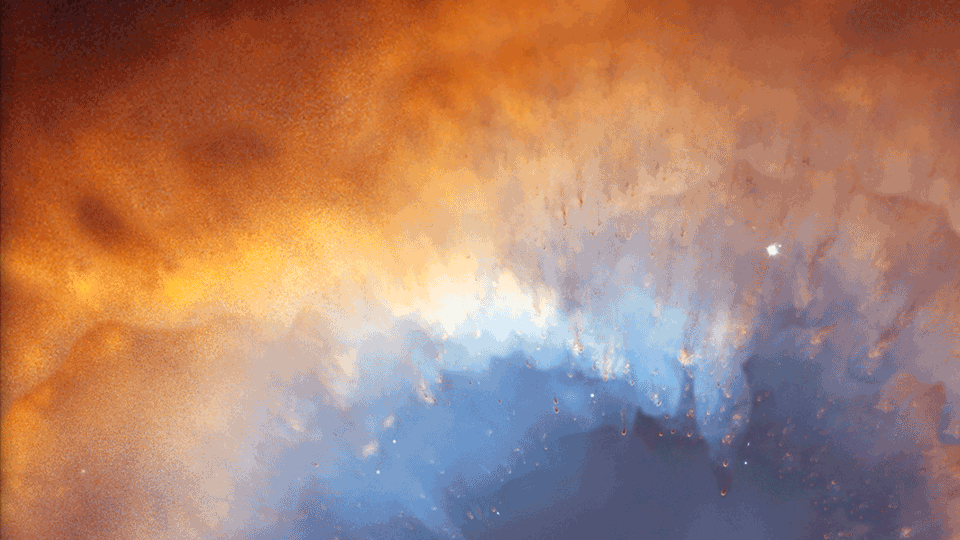

WHATAREYOULOOKINGAT is an audience-involved, interactive meta-instrument for data sonification. The performance has three goals: to extract data from the audience, emulating a form of government-sanctioned privacy infringement; to manipulate and share that data among the three primary performers; and to present it as surround-sound audio and interactive three-channel video projection.

Year

2014